It must have been Google Alerts that sent me a link to the HotOS 2007 (Hot Topics in Operating Systems) paper by Tal Garfinkel, Keith Adams, Andrew Warfield, and Jason Franklin called Compatibility is not Transparency: VMM Detection Myths and Realities. This paper is slightly less than a year old today, so it is old by blog standards and quite recent by research paper standards. It deals with the interesting problem of whether a virtual machine can be made undetectable to software running on it, including software that is actively trying to detect it. Their conclusion is that it is not feasible, and I agree with that. The reason WHY that is the case can use some more discussion, though… and here is my take on that issue from a Simics/embedded systems virtualization perspective.

Their main assumption is that the VMM cannot be tailored to avoid detection by any particular piece of software, but has to be sufficiently like the real thing to fool a detector the first time it appears. They discuss this from the perspective of virtualization solutions like VMware that aim at high performance before all else. The virtual PCs generated by VMware, Parallels, KQemu, and others are all compatible with physical PCs (they run the same software) but are not at all identical in detail. So they are not transparent, in the words of the paper. This means that they are quite easy to spot.

Some of the functional differences are holes that VMMs can quite easily plug. The paper shows how you can observe a different-sized TLB (compared to the physical hardware), for example, due to interference from the VMM. This can obviously be fixed in the VMM, at a cost in performance. The reason such differences are there is that VMMs are optimized for performance at almost any cost. As long as the requisite operating systems run as they should, the VMM is fine even if it does not actually correspond to any particular existing physical machine. This is a testament to the tolerance of modern operating systems towards their hardware. Basically, any OS that probes hardware and discovers what is there will work fine as long as the (virtual) hardware exposes devices that it can recognize. This is quite different from the 1970s or 1980s, when an OS would expect a very particular hardware setup with very peculiar timing to run at all. Thus, making a VMM totally identical to some physical machine is a waste of effort and performance.

Paravirtual approaches like Xen, and what Sun has with Niagara and IBM on their Power servers, where the OS is adapted by adding drivers for a purely virtual hardware/software interface, are an obvious generalization of the VMware compatibility approach. Compatible versus transparent/invisible virtualization is really only an issue in the x86 PC world, since all other datacenter architectures are virtual by definition and all operating systems work towards a standard virtual layer. In such an environment, I have a hard time seeing that the question posed in the paper even makes sense. You are always virtualized, period.

Embedded Virtual Platforms

Anyhow, back to the main thread. There is still a large set of targets where transparency and compatibility are of interest. x86 PCs are one such target, and it is also an interesting question for older architectures (Alpha, VAX, and older generations of Sun and IBM machines). In particular, it is an important topic for embedded systems where you want to use virtual or simulated approaches to develop and test software. As part of that software development process on a virtual machine, you could potentially be examining malware of various kinds. A good not-too-hypothetical example is mobile phone viruses.

If we look at embedded system virtual platforms, the functionality of the simulator is usually more complete and more like a particular physical machine than what a VMware-style datacenter VMM offers. This is partially due to embedded software stacks tending to be a bit pickier about what they run on, and partially due to the simple fact that the goal really IS to expose the hardware/software interface of a particular piece of hardware as closely as possible. Also, since this is usually cross-target simulation (Power Architecture on x86, for example), there is no performance gain from using features of the host directly. So items like TLB counts, memory layout, memory content, flash memory programming, etc. are all going to be functionally identical to the physical machine.

Timing is Key

Thus, just like for a patched VMware-style VMM as discussed in the paper, the main attack vector remains timing.

The best way, according to the authors, to spot a VMM is to look for timing differences compared to the behavior on normal hardware. Despite the inherent variability of typical hardware, there are cases where VMMs by necessity vary by detectable amounts. I would say this means a factor of five or more, measured over many runs of a test case.
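As a rough illustration of that kind of check, here is a minimal Python sketch. It assumes the detector already knows a baseline time for the operation on physical hardware (`baseline_ns` is a hypothetical input, as are the function names), and it uses the median over many runs so that a few outliers do not decide the outcome. The factor of five is just my rule of thumb from above, not a number from the paper.

```python
import statistics
import time


def time_operation(op, repeats=1000):
    """Run op() repeatedly and return the per-run durations in nanoseconds."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter_ns()
        op()
        samples.append(time.perf_counter_ns() - start)
    return samples


def slowdown_factor(samples, baseline_ns):
    """Median observed time divided by the time expected on real hardware."""
    return statistics.median(samples) / baseline_ns


def looks_virtualized(samples, baseline_ns, threshold=5.0):
    """Flag the platform when the median slowdown reaches the threshold."""
    return slowdown_factor(samples, baseline_ns) >= threshold
```

In a real detector the operation being timed would be something a VMM has to intercept or emulate; the sketch only shows the statistics around it.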

The authors discuss whether tools like Virtutech Simics could be used to overcome this problem in the context of x86 PCs. I think the main argument for something like Simics for this purpose is that by simulating the entire hardware platform and providing all timing measurements from a strong virtual time base, you do not see the types of time differences that can be used to detect a “normal” VMM. However, since the paper considers Simics and SimNow (from AMD) to be about ten times slower than native hardware, you can always detect them using a non-local time source. That is likely true. But it is less obviously true for an embedded target, where the simulator running on a fast PC might well be just as fast as the target.

The Multicore Timing Attack

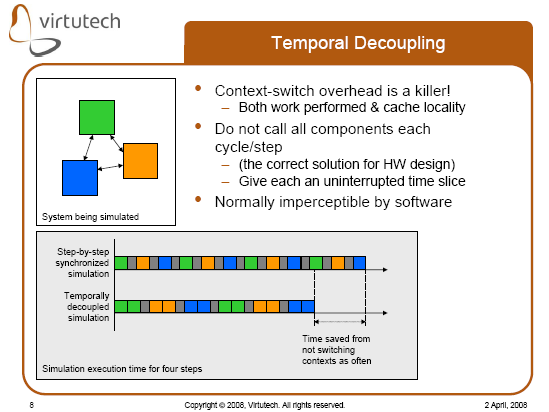

A more intriguing aspect of embedded virtual platforms that could be used to detect them is how simulation of multicore machines is handled. For performance reasons, simulators use temporal decoupling, where each virtual processor is run for a “long” time slice before switching to the next. We discussed the effect of this in a recent presentation at the Multicore Expo (link to previous blog post), and some of that data is worth repeating.
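To make the idea concrete, here is a toy Python model of temporal decoupling. It is not how Simics or any real simulator is implemented, just a sketch under simple assumptions: two virtual CPUs, one shared flag, and each CPU checking the flag once per simulated cycle. CPU 0 sends a "ping" by setting the flag, CPU 1 answers with a "pong" by clearing it, and the function (a hypothetical name) counts how many round trips complete.

```python
def ping_pong_round_trips(total_cycles, time_slice):
    """Count ping-pong round trips between two temporally decoupled CPUs.

    Each CPU runs time_slice cycles in a row before the other gets to run.
    flag == 0 means it is CPU 0's turn to ping, flag == 1 means CPU 1
    should answer with a pong.
    """
    flag = 0
    round_trips = 0
    cpu0_time = 0
    while cpu0_time < total_cycles:
        for cpu in (0, 1):
            for _ in range(time_slice):
                if flag == cpu:
                    flag = 1 - cpu          # hand the turn to the other CPU
                    if cpu == 1:
                        round_trips += 1    # a pong completes one round trip
                # otherwise: spin for this cycle, waiting for the other CPU
        cpu0_time += time_slice
    return round_trips


if __name__ == "__main__":
    for time_slice in (1, 10, 100, 1000):
        print(time_slice, ping_pong_round_trips(1000, time_slice))
```

In this model only one round trip can complete per time slice, since a CPU that has handed over the turn spins for the rest of its slice. The average round-trip latency in simulated cycles therefore equals the slice length.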

Here is a slide explaining how temporal decoupling works:

So what does this mean in practice for detecting that you are running in a virtual machine?

It means that the communication latency between parallel threads is proportional to the length of the time slice. If you have two threads running in parallel and contending for a spinlock, on a real machine they will be stealing the lock from each other all the time. On a temporally decoupled simulator, you will instead see one thread take the lock and then recapture it a few times before missing it. This effect was captured by a simple test program that we wrote, and the data is shown in the slide below:

The program here is running two threads in parallel, updating a shared variable, with three types of locking for the accesses:

- No locking at all

- A local lock to each thread being used (“fake locking”)

- A proper lock
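The structure of such a test can be sketched in a few lines of Python. This is not the program we used for the slides; the names are made up for illustration, and Python's global interpreter lock means the timings from this sketch will not show the effect discussed here. A real test would be native code running on two cores.

```python
import threading
import time


def run_locking_test(style, iterations=10000):
    """Run two threads updating a shared counter; return (count, seconds).

    style is "none" (no locking), "fake" (each thread uses its own private
    lock, so nothing is actually protected) or "proper" (one shared lock).
    """
    counter = [0]
    shared_lock = threading.Lock()

    def worker():
        private_lock = threading.Lock()
        lock = {"none": None, "fake": private_lock, "proper": shared_lock}[style]
        for _ in range(iterations):
            if lock is None:
                counter[0] += 1
            else:
                with lock:
                    counter[0] += 1

    threads = [threading.Thread(target=worker) for _ in range(2)]
    start = time.perf_counter()
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter[0], time.perf_counter() - start
```

Only the "proper" style guarantees that the final count is exactly twice the iteration count; the other two may lose updates.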

The interesting behavior is the execution time of the program for each of these locking styles. Obviously, running with no lock is the fastest, and with proper locking the slowest. The relative speed of these is the factor to consider. On real hardware, this program observes a very steep increase in execution time when using proper locking. On the simulator, as seen above, the difference in execution time between fake locking and proper locking is significantly smaller when using a long time slice compared to when using a short time slice. The behavior on physical machines is much more like that observed at time slice lengths of ten than that at time slices of 10000.

Normally, a multiprocessor simulator with any ambition to be fast has to use a time slice of 1000 or more. Thus, detecting that you are running inside a simulator is quite simple. If the outside world time seems right, check if you can see strange timing behavior when using locks. Since high speed requires a long time slice, a simulator cannot show both correct real-world timing and the large performance difference between fake and proper locking that real hardware shows. And on the other hand, if the behavior with locking seems reasonable, you should check the real-world time, as a simulator with a short time slice will be way slower than the real world.
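The two checks combine into a simple decision procedure, sketched here in Python. Everything in it is an assumption for illustration: the function name, the inputs (elapsed time on an external clock versus the local clock, and the measured versus expected ratio of proper-lock time to fake-lock time), and the `slack` factor of two.

```python
def detect_simulator(external_elapsed, local_elapsed,
                     lock_penalty, expected_lock_penalty, slack=2.0):
    """Return a reason string if a simulator is suspected, else None.

    external_elapsed / local_elapsed: the same interval as measured by an
        outside time source and by the (possibly virtual) local clock.
    lock_penalty: measured proper-lock time divided by fake-lock time.
    expected_lock_penalty: the same ratio as known from real hardware.
    """
    # A short time slice gives realistic locking but a slow simulator.
    if external_elapsed > slack * local_elapsed:
        return "slow against external clock"
    # A long time slice gives speed but makes lock contention too cheap.
    if lock_penalty * slack < expected_lock_penalty:
        return "lock contention too cheap"
    return None
```

For example, `detect_simulator(1.0, 1.0, 1.5, 8.0)` reports that lock contention is too cheap, while `detect_simulator(10.0, 1.0, 8.0, 8.0)` reports the platform as slow against the external clock.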

The paper authors note a similar aspect in desktop/server x86 VMM detection. They discuss “performance cliffs” that appear when doing “unusual” things. For example, VMware is engineered assuming minimal use of self-modifying code. Performance is much worse if you use it extensively, and this can be used to detect VMware quite effectively. This effect is quite similar to the time slice effect in embedded virtual platforms.

Hope you enjoyed this fairly long rant. And we have not even begun exhausting the contents of this topic… luckily, these discrepancies only very rarely impact the usefulness of virtual platforms, since most software even on an embedded system does not care about detailed timing like this. In the example above, we still see the lock contention, so we know that we are getting an increase in execution time from the lock. We just do not get a complete picture of what it means in absolute terms. We will still find missing locks and overused locks.

Aloha!

Very interesting, and a good example of how hard it is to create a virtual environment that can’t be distinguished from the real system.

It might be worth mentioning that Skype (the application) does a very good job at figuring out if it is running in a virtual machine (actually under a debugger). For some scary code-hiding and env-detection, see:

http://www.blackhat.com/presentations/bh-europe-06/bh-eu-06-biondi/bh-eu-06-biondi-up.pdf

Also, in a discussion like this I would like to point at Rutkowska's Blue Pill, which tries to sneak a trojan *under* the system by introducing a hypervisor and placing the target system in it.

http://theinvisiblethings.blogspot.com/2006/06/introducing-blue-pill.html

Old but still very good.

The Biondi presentation was quite interesting. Skype goes to great lengths to protect itself, but from what I could see it would be pretty well defeated by an enclosed virtual machine. Since Skype cannot reasonably crash itself when lacking a network connection, it cannot defend itself against being stepped by a system that does not change its code and that has complete control over timing. The debugger detection that Skype contains is limited to a debugger running within the same OS as Skype; it will not stop a virtual machine used as a debugger.